AI: The Next Programming Paradigm

We explore the premise that programming with Large Language Models (LLMs) is the next evolution of programming languages, this time into natural language, and that this shift is no different in kind from any earlier abstraction.

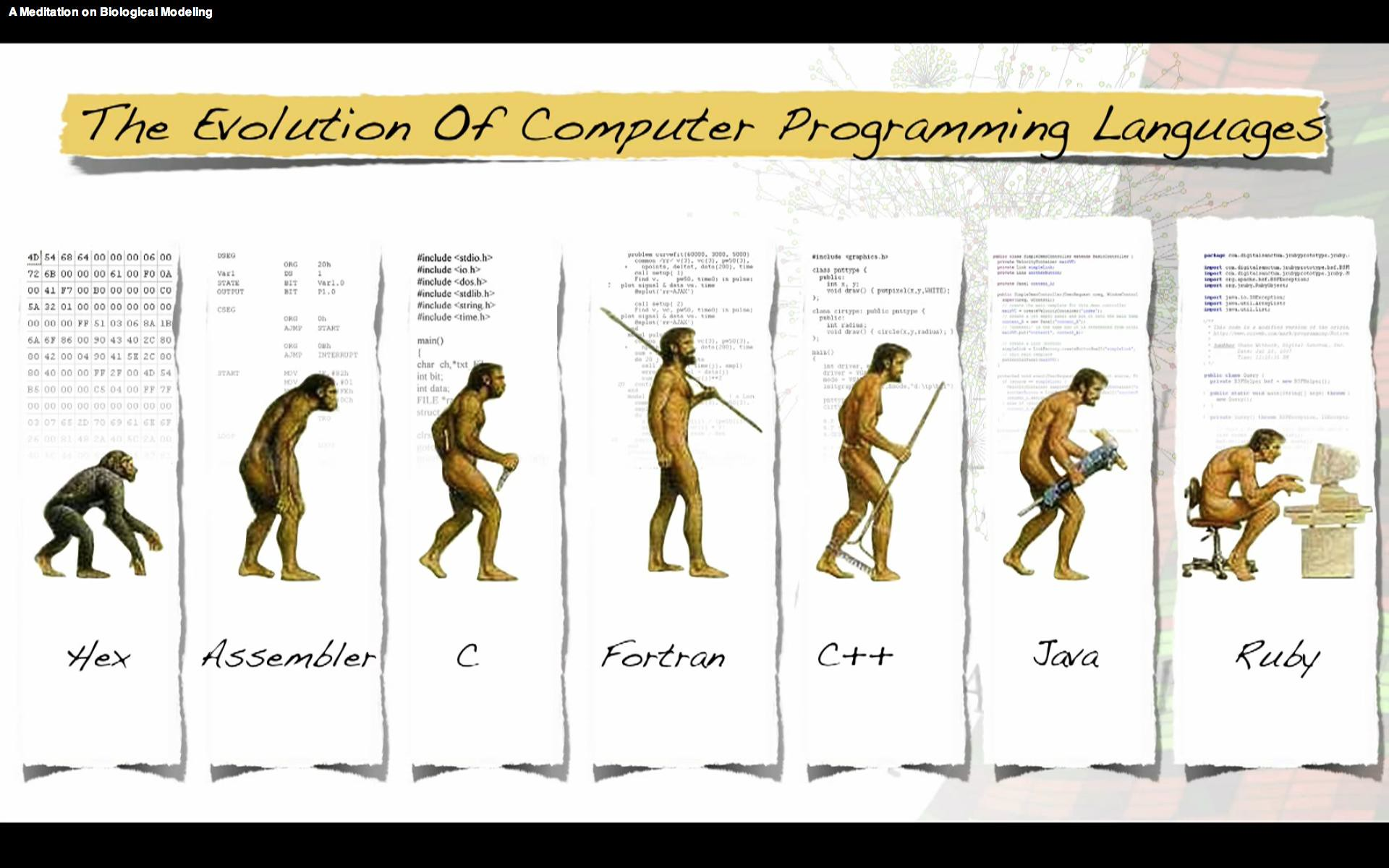

The Evolution of Programming Languages

In the early days of computing, programming meant writing machine code directly: raw binary strings (e.g. 10110000 01100001), so intelligible... Soon after, assembly language offered a slight improvement with mnemonics such as MOV AL, 61h (the same x86 instruction as the binary above), bringing a hint of human readability to the mix.

Then, as if out of a European fairy tale, came higher-level languages such as C, Java, and Python. These provided far more abstraction, letting developers focus on logic and structure rather than register manipulation and memory addressing. Each new layer aimed at one goal: to make programming, whether of a coffee machine or anything else, more intuitive (Sebesta, 2016).

Natural Language as the Next Step

Enter capable Large Language Models (LLMs) such as GPT-4. These systems interpret and generate natural language with astonishing fluency. They are not just chatbots; they are engines of translation, able to convert human intent into code that often, though not always, runs as intended.

The history of programming shows a clear direction:

- Machine Code: 10110000 01100001

- Assembly Language: MOV AL, 61h

- High-Level Languages: print("Hello, World!")

- Natural Language: "Display 'Hello, World!' on the screen"

Each stage moves further away from the hardware and closer to how people describe what they want. The argument here is that natural language is not a leap out of this sequence; it is the next logical step in it.

LLMs as Programming Interfaces

LLMs can act as a compiler, an interpreter, and a pair programmer rolled into one. They can turn prompts like "Write a Python function to calculate factorials" into working code. They're not magic, but they mark something close to an inflection point in software tooling.
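For illustration, a prompt like the factorial one might plausibly yield something along these lines. This is a hand-written sketch of typical output, not a transcript from any particular model; the function name `factorial`, the iterative loop, and the error handling are all assumptions.

```python
def factorial(n: int) -> int:
    """Return n! for a non-negative integer n."""
    # Reject inputs for which the factorial is not defined.
    if not isinstance(n, int) or n < 0:
        raise ValueError("n must be a non-negative integer")
    result = 1
    # Multiply 2 * 3 * ... * n; for n = 0 or 1 the loop body never runs and 1 is returned.
    for k in range(2, n + 1):
        result *= k
    return result


print(factorial(5))  # 120
```

Even for a request this small, the prompt left choices open (iteration or recursion, what to do with negative input), and the model has to resolve them on the user's behalf.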

This paradigm offers clear advantages:

- Accessibility – People without formal training can contribute to codebases.

- Speed – Developers can move from idea to prototype in minutes.

- Collaboration – Business stakeholders can express logic in words, not pseudo-code.

This isn’t the death of coding, oh no no no... It’s simply the natural evolution of it (Chen et al., 2021).

Challenges and Considerations

Despite the promise, natural language programming has obvious drawbacks:

- Ambiguity – Human language is imprecise. "Show all users" might mean different things depending on context and individual interpretation.

- Context Depth – LLMs work within a limited context window: they see only the text supplied to them at that moment. Complex logic chains spread across a large codebase can get lost without enough cues.

- Skill Degradation – As with calculators and mental arithmetic, reliance on AI may erode foundational knowledge.
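To make the ambiguity point concrete, here is a minimal sketch of three reasonable readings of "Show all users". The `User` record, its `active` and `deleted` fields, and the sample data are hypothetical, chosen only to show how one phrase can map to different code.

```python
from dataclasses import dataclass


@dataclass
class User:
    name: str
    active: bool
    deleted: bool  # soft-deleted accounts remain in the table


# Hypothetical sample data.
USERS = [
    User("ada", active=True, deleted=False),
    User("bob", active=False, deleted=False),
    User("cy", active=False, deleted=True),
]


def show_all_users_literal(users: list[User]) -> list[str]:
    """Reading 1: every record in the table, including soft-deleted ones."""
    return [u.name for u in users]


def show_all_users_existing(users: list[User]) -> list[str]:
    """Reading 2: every account that has not been deleted, active or not."""
    return [u.name for u in users if not u.deleted]


def show_all_users_active(users: list[User]) -> list[str]:
    """Reading 3: only accounts that are currently active."""
    return [u.name for u in users if u.active and not u.deleted]


print(show_all_users_literal(USERS))   # ['ada', 'bob', 'cy']
print(show_all_users_existing(USERS))  # ['ada', 'bob']
print(show_all_users_active(USERS))    # ['ada']
```

A model has to commit to one of these readings; unless the prompt or the surrounding context says which, the choice is effectively a guess.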

We must treat LLMs as accelerators, not autopilots. Domain expertise still matters, and so does understanding what the machine is really doing.
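One lightweight way to act on this is to treat generated code as a draft and check it against cases you understand before accepting it. The sketch below assumes a hypothetical model-produced `is_leap_year_generated` that applies only the divisible-by-four rule, a classic oversight; the helper `failing_cases` and the hand-picked years are likewise illustrative.

```python
def is_leap_year_generated(year: int) -> bool:
    # Hypothetical model output: plausible-looking but incomplete.
    return year % 4 == 0


def is_leap_year_reference(year: int) -> bool:
    # Gregorian rule: divisible by 4, except centuries, unless divisible by 400.
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


# Years whose answers a developer can verify by hand.
KNOWN_CASES = {1996: True, 1900: False, 2000: True, 2023: False, 2024: True}


def failing_cases(candidate) -> list[int]:
    """Return the known years on which `candidate` gives the wrong answer."""
    return [year for year, expected in KNOWN_CASES.items() if candidate(year) != expected]


print(failing_cases(is_leap_year_generated))  # [1900]
print(failing_cases(is_leap_year_reference))  # []
```

Note that the test cases themselves come from knowing the actual rule: the domain expertise is what makes the check possible in the first place.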

The Future of Programming

Natural language programming isn’t about replacing coders; it’s about extending what they can do and the value they offer. It lets more people contribute to the development process, shortens feedback loops, and narrows the gap between intention and execution.

Programming is becoming more reflective of how we think and communicate. We can already see it: code is increasingly something we write with AI rather than type out line by line like a schmuck.

Natural language is a new interface layer in our continued quest to make computing more human.

References

Chen, M., Tworek, J., Jun, H., Yuan, Q., de Oliveira Pinto, H.P., Kaplan, J., Edwards, H., Burda, Y., Joseph, N., Brockman, G., Ray, A., Puri, R., Krueger, G., Petrov, M., Khlaaf, H., Sastry, G., Mishkin, P., Chan, B., Gray, S., Ryder, N., Pavlov, M., Power, A., Kaiser, Ł., Bavarian, M., Winter, C., Tillet, P., Such, F.P., Cummings, D., Plappert, M., Chantzis, F., Barnes, E., Herbert-Voss, A., Guss, W.H., Nichol, A., Paino, A., Tezak, N., Tang, J., Babuschkin, I., Balaji, S., Jain, S., Saunders, W., Hesse, C., Carr, A.N., Leike, J., Achiam, J., Misra, V., Morikawa, E., Radford, A., Knight, M., Brundage, M., Murati, M., Mayer, K., Welinder, P., McGrew, B., Amodei, D., Ramesh, A., Ziegler, D.M., Brown, T.B. and Sutskever, I., 2021. Evaluating large language models trained on code. arXiv preprint arXiv:2107.03374. Available at: https://arxiv.org/abs/2107.03374.

Sebesta, R.W., 2016. Concepts of Programming Languages. 11th ed. Boston: Pearson.